Tip

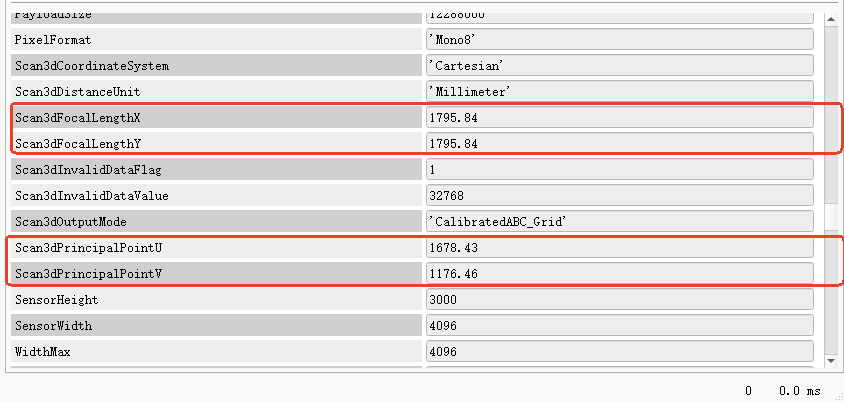

As shown above, from Mech-Eye SDK 2.4.0 onward, camera parameters can be read directly in Halcon without converting them through the API.

1. Obtaining the intrinsic parameters of a Mech-Eye Industrial 3D Camera

The intrinsic parameters of Mech-Eye Industrial 3D Cameras can be obtained through the ‘getDeviceIntri’ interface of the Mech-Eye API. For usage details, see the following sample:

GitHub Mech-Eye API GetCameraIntri

2. Printed intrinsic parameters

Connect Mech-Eye Successfully.

CameraDistCoeffs: k1: 0.0, k2: 0.0, p1: 0.0, p2: 0.0, k3: 0.0

DepthDistCoeffs: k1: 0.0, k2: 0.0, p1: 0.0, p2: 0.0, k3: 0.0

name: CameraMatrix

[2552.997597580249, 2552.997597580249

1587.164794921875, 1569.4437408447266]

name: DepthMatrix

[1276.4987987901245, 1276.4987987901245

793.3323974609375, 784.4718704223633]

Disconnected from the Mech-Eye device successfully.

3. Converting the Mech-Eye Industrial 3D Camera intrinsic parameters to the Halcon format

Halcon provides the “cam_mat_to_cam_par” operator, which converts a 3×3 camera matrix (here, the data from DepthMatrix) into the CameraParam tuple that Halcon requires. With DepthMatrix passed as the camera matrix, the operator signature is as follows:

cam_mat_to_cam_par( : : DepthMatrix, Kappa, ImageWidth, ImageHeight : CameraParam)

For detailed usage instructions, please refer to: cam_mat_to_cam_par (Operator).

The “cam_mat_to_cam_par” operator expects DepthMatrix in the following format:

[ fx 0 cx

0 fy cy

0 0 1]

Mech-Eye SDK, by contrast, prints the matrix in one of two formats, depending on the version:

- Mech-Eye SDK version 2.0.2 or later:

[ fx 0 cx

0 fy cy

0 0 1]

- Mech-Eye SDK version 2.0.0:

[ fx, fy

cx, cy]

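Pass the acquired DepthMatrix values to the operator in row-major order (fx, 0, cx, 0, fy, cy, 0, 0, 1), taking care to map each printed value to the correct position, together with the actual width and height of the image in Halcon. The operator then returns the Halcon-formatted CameraParam: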

cam_mat_to_cam_par ([1276.4987987901245, 0., 793.3323974609375, 0., 1276.4987987901245, 784.4718704223633, 0., 0., 1.], 0, 1280, 1024, CameraParam)
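The reordering from the four printed values into the nine-element matrix is easy to get wrong by hand, so here is a minimal Python sketch of it. The helper names `to_halcon_cam_mat` and `format_halcon_call` are hypothetical and are not part of Mech-Eye API or Halcon. The sketch only builds the row-major list and the text of the HDevelop line; it does not call Halcon.

```python
def to_halcon_cam_mat(fx, fy, cx, cy):
    """Return the 3x3 camera matrix as a row-major list of 9 floats.

    Hypothetical helper: it takes the four values printed by the SDK
    and arranges them as [fx, 0, cx, 0, fy, cy, 0, 0, 1].
    """
    fx, fy, cx, cy = (float(v) for v in (fx, fy, cx, cy))
    return [fx, 0.0, cx,
            0.0, fy, cy,
            0.0, 0.0, 1.0]


def format_halcon_call(cam_mat, width, height, kappa=0.0):
    """Build the HDevelop line as text only; nothing is executed here."""
    elements = ", ".join(repr(v) for v in cam_mat)
    return (f"cam_mat_to_cam_par ([{elements}], {kappa!r}, "
            f"{width}, {height}, CameraParam)")


# DepthMatrix values from the printed output in section 2,
# where the first row is (fx, fy) and the second row is (cx, cy).
depth_cam_mat = to_halcon_cam_mat(1276.4987987901245, 1276.4987987901245,
                                  793.3323974609375, 784.4718704223633)
halcon_line = format_halcon_call(depth_cam_mat, 1280, 1024)
print(halcon_line)
```

The printed line can be pasted into HDevelop. Converting every element with `float` keeps the matrix entries floating-point, in line with the note on data type consistency.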

Important notes

- On some camera models, the intrinsic parameters of the 2D image differ from those of the depth map. Make sure the set you pass (CameraMatrix or DepthMatrix) matches the type of image you are processing.

- To keep the data types consistent, enter every element of DepthMatrix as a floating-point value (for example, 0. and 1. rather than 0 and 1).

- All images output by Mech-Eye API have already undergone distortion correction, so the distortion coefficients are all 0.
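To illustrate what the matrix entries mean when all distortion coefficients are 0, the following Python sketch applies the standard pinhole model to the DepthMatrix values printed in section 2. The function `project_point` is a hypothetical helper, not part of Mech-Eye API or Halcon, and the sketch assumes the 3D point is expressed in the camera frame with z pointing forward.

```python
def project_point(cam_mat, x, y, z):
    """Project a 3D point in the camera frame to pixel coordinates.

    cam_mat is the row-major list [fx, 0, cx, 0, fy, cy, 0, 0, 1].
    No distortion term is applied, which matches all-zero coefficients.
    """
    if z <= 0:
        raise ValueError("point must lie in front of the camera (z > 0)")
    fx, cx = cam_mat[0], cam_mat[2]
    fy, cy = cam_mat[4], cam_mat[5]
    u = fx * x / z + cx  # column coordinate in pixels
    v = fy * y / z + cy  # row coordinate in pixels
    return u, v


# DepthMatrix values from the printed output in section 2.
depth_cam_mat = [1276.4987987901245, 0.0, 793.3323974609375,
                 0.0, 1276.4987987901245, 784.4718704223633,
                 0.0, 0.0, 1.0]

# A point on the optical axis projects onto the principal point (cx, cy).
u, v = project_point(depth_cam_mat, 0.0, 0.0, 1.0)
print(u, v)
```

Because x, y, and z only appear as ratios, any length unit works as long as the three coordinates share it.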