This post explains how to use super model packages to recognize boxes and sacks.

1. Get super model package

2. Deploy super model package in Mech-Vision

3. Check recognition effect

4. Finetune super models in Mech-DLK (optional)

Note: Finetuning super models within Mech-DLK is necessary only if the recognition results are unsatisfactory.

The super model package is Mech-Mind’s general-purpose model for recognizing boxes or sacks. If the recognition results are unsatisfactory, you can finetune the model in Mech-DLK.

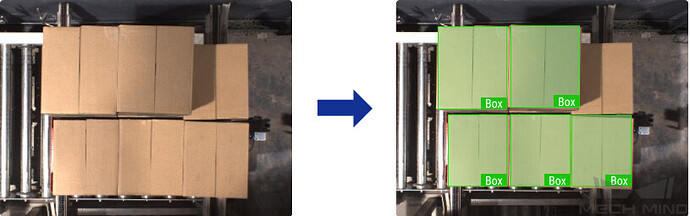

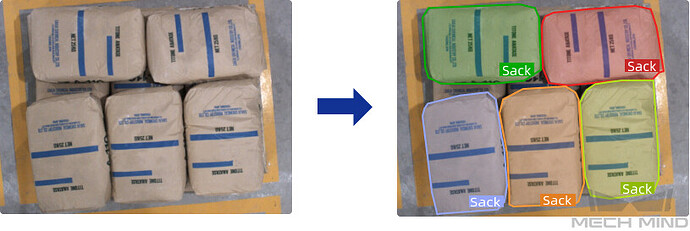

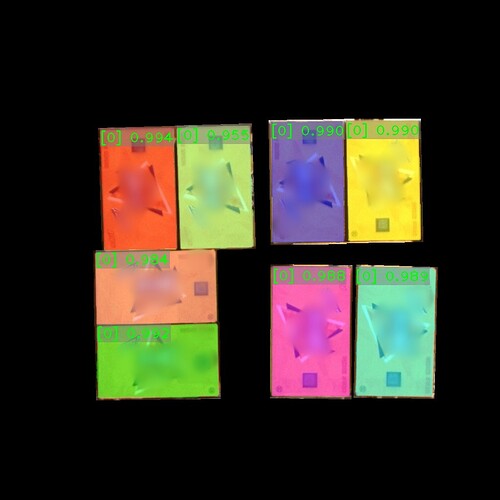

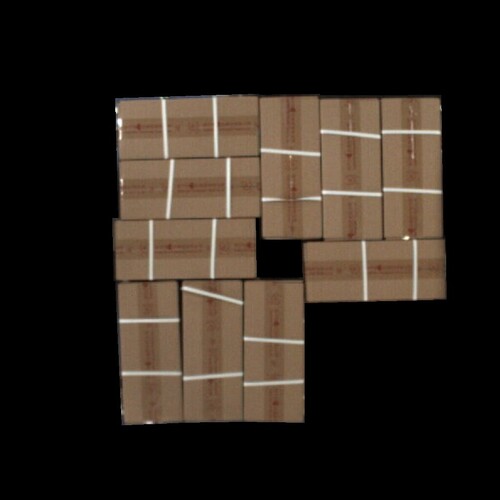

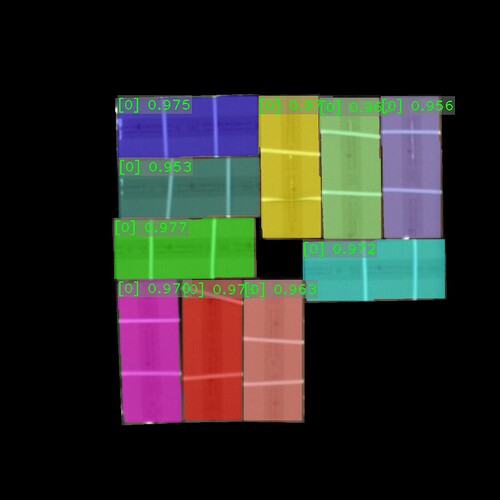

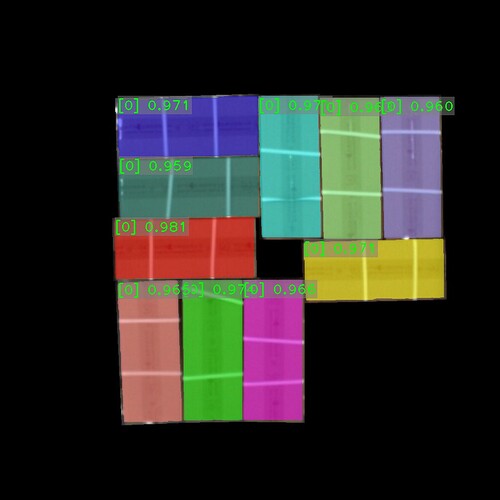

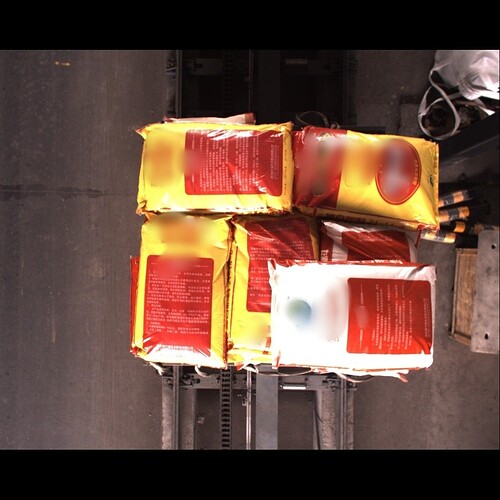

The final locating and recognition results are in the images below. The images on the left are 2D images of the boxes and sacks, and the images on the right show the deep learning recognition results.

Get super model package

Mech-Vision uses super model packages for inference to recognize boxes or sacks accurately, so you first need to obtain the model package designed for box or sack recognition.

Navigate to the Download Center and download the super model packages compatible with Mech-Vision.

Note:

- Recognition accuracy: Compared with the CPU model, the GPU model recognizes object profiles more accurately.

- Calculation speed: In a laboratory test (12th Gen Intel Core i5-12400 vs. NVIDIA GTX 1050 Ti), the inference speed of the CPU model was generally the same as that of the GPU model.

Deploy super model package in Mech-Vision

Import super model package

Import the super model package into the Deep Learning Model Package Management Tool.

Use super model packages for inference

Use the Step “Deep Learning Model Package Inference” with the imported super model package to perform inference on images of boxes or sacks.

Note: Model package inference is the process of using a trained deep learning model to make predictions on data, for tasks such as image classification and instance segmentation.

Check recognition effect

Once the Step “Deep Learning Model Package Inference” is complete, you can review the recognition results in Mech-Vision.

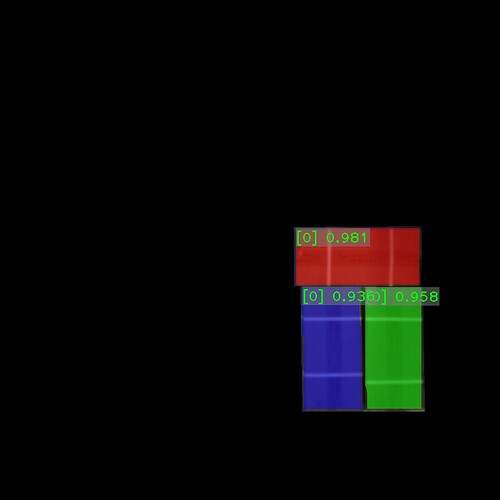

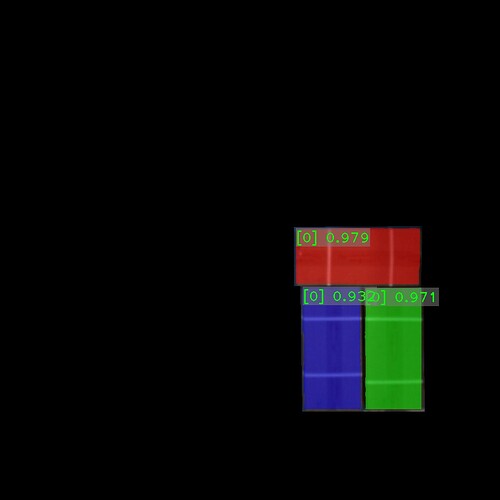

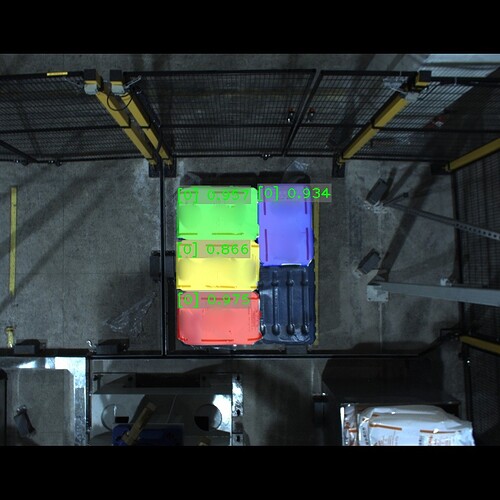

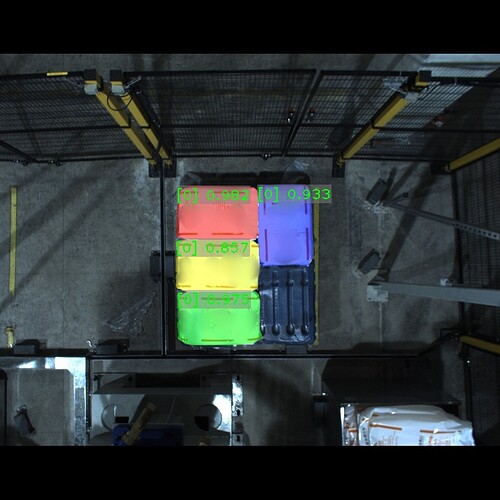

Demo on boxes

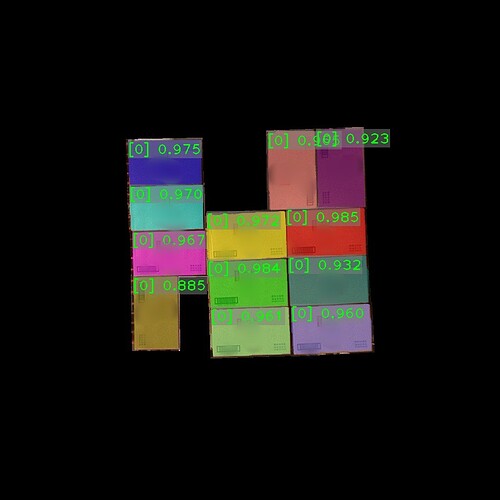

The number indicates confidence, namely the recognition performance score.

The edges of the colored masks are the recognized box edges. The edges recognized by the box super model fit the actual box edges closely.
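The confidence value shown for each recognized object can also be used to discard weak results downstream. The following is a minimal sketch in plain Python, not Mech-Vision code: the `Detection` structure, the assumption that confidence lies between 0 and 1, and the threshold of 0.7 are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """One recognized instance (hypothetical structure, not a Mech-Vision type)."""
    label: str         # e.g. "box" or "sack"
    confidence: float  # assumed to be a score between 0 and 1


def filter_by_confidence(detections, threshold=0.7):
    """Keep detections scoring at least `threshold`, highest confidence first."""
    kept = [d for d in detections if d.confidence >= threshold]
    return sorted(kept, key=lambda d: d.confidence, reverse=True)


# Illustrative values only; they are not results from the super model.
detections = [
    Detection("box", 0.98),
    Detection("box", 0.42),
    Detection("box", 0.87),
]
reliable = filter_by_confidence(detections, threshold=0.7)
print([d.confidence for d in reliable])  # [0.98, 0.87]
```

A suitable threshold depends on the site; if many correct objects fall below it, that can be a sign that the lighting, the ROI, or the model needs attention.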

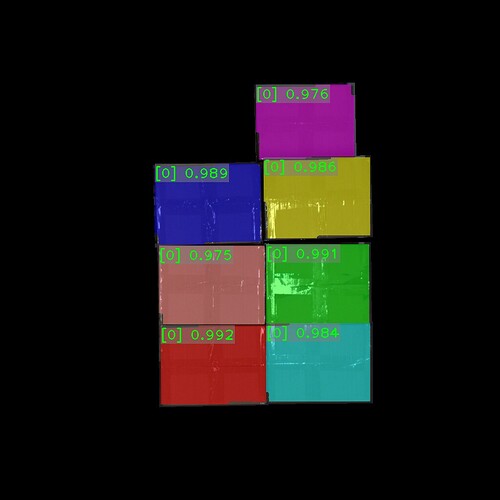

Demo on sacks

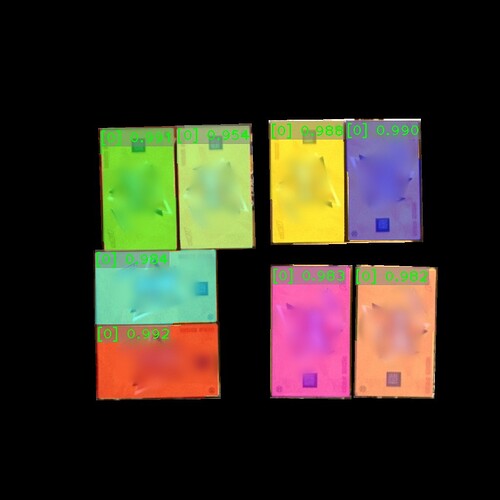

The number indicates confidence, namely the recognition performance score.

The edges of the colored masks are the recognized sack edges. The edges recognized by the sack super model fit the actual sack edges closely.
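If you want a number for how closely a recognized mask fits an object, one common metric is intersection over union (IoU) against a manually labeled reference mask. This post does not say how Mech-Mind measures fit, so the sketch below is only an assumption-based illustration; the function name and the tiny 3x3 masks are hypothetical.

```python
def mask_iou(predicted, reference):
    """Intersection over union of two binary masks given as lists of rows."""
    if len(predicted) != len(reference):
        raise ValueError("masks must have the same number of rows")
    intersection = 0
    union = 0
    for pred_row, ref_row in zip(predicted, reference):
        if len(pred_row) != len(ref_row):
            raise ValueError("masks must have the same number of columns")
        for p, r in zip(pred_row, ref_row):
            if p and r:
                intersection += 1
            if p or r:
                union += 1
    # Two empty masks are treated as a perfect match by convention.
    return intersection / union if union else 1.0


# Toy masks: the prediction misses the bottom row of the object.
predicted = [[0, 1, 1],
             [0, 1, 1],
             [0, 0, 0]]
reference = [[0, 1, 1],
             [0, 1, 1],
             [0, 1, 1]]
print(round(mask_iou(predicted, reference), 3))  # 0.667
```

An IoU close to 1 means the mask edge follows the object edge closely; lower values on a specific box or sack type can help decide whether finetuning is worthwhile.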

Finetune super models in Mech-DLK

If the recognition results for boxes or sacks are satisfactory, finetuning the super models is unnecessary.

If the recognition results for boxes or sacks are unsatisfactory, finetuning the super models in Mech-DLK is required.

Get super models

Since Mech-DLK does not support finetuning directly on super model packages, it is necessary to obtain the corresponding super model for the model package.

Navigate to the Download Center and download the super models compatible with Mech-DLK.

Finetune super models

Open Mech-DLK, select “Instance Segmentation”, and follow the instructions in the Mech-DLK Software Manual.

Once finetuning is complete, export the super model as a super model package, and then import this package into Mech-Vision for inference.

FAQs about recognition

1. How to avoid incorrect recognition?

To prevent incorrect recognition, consider the following steps:

- Keep the lighting conditions on-site stable to avoid capturing images that are overexposed, underexposed, or shadowed. When the lighting conditions are unstable, the sack edges in captured images will be unclear, which reduces the stability of deep learning recognition.

- Adjust the camera’s white balance to prevent capturing overexposed or underexposed images.

- Do not place unrelated boxes or sacks near the pallet to prevent incorrect recognition.

- Set an appropriate region of interest (ROI).
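The exposure advice above can be checked numerically before images reach the model. The sketch below is a rough, assumption-based check in plain Python: it treats the image as a flat list of 8-bit grayscale intensities, and the function name, the clipping limits (10 and 245), and the 5% tolerance are hypothetical values rather than Mech-Mind recommendations.

```python
def exposure_report(pixels, dark=10, bright=245, max_fraction=0.05):
    """Classify an 8-bit grayscale image by its share of near-clipped pixels.

    Returns (status, dark_fraction, bright_fraction), where status is
    "overexposed", "underexposed", or "ok".
    """
    values = list(pixels)
    if not values:
        raise ValueError("image has no pixels")
    dark_fraction = sum(v <= dark for v in values) / len(values)
    bright_fraction = sum(v >= bright for v in values) / len(values)
    if bright_fraction > max_fraction:
        status = "overexposed"
    elif dark_fraction > max_fraction:
        status = "underexposed"
    else:
        status = "ok"
    return status, dark_fraction, bright_fraction


# Toy image: 30% of the pixels are almost white.
image = [250] * 30 + [128] * 70
status, dark_fraction, bright_fraction = exposure_report(image)
print(status, bright_fraction)  # overexposed 0.3
```

Running a check like this on images captured at different times of day is one way to see whether on-site lighting is stable enough.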

2. How to set an appropriate ROI?

Set the ROI based on the full pallet pattern, that is, the largest pallet load. Make sure that the ROI covers the highest and lowest regions of the pallet and is slightly larger than the full pallet pattern. The ROI should also exclude interfering point clouds as far as possible.
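The ROI rule above amounts to simple arithmetic: take the full-pallet dimensions and add a small margin on every side. In Mech-Vision the ROI is set in the software itself; the sketch below only illustrates the calculation. The function names, the axis-aligned frame with z pointing up, the units (meters), and the 0.05 m margin are all assumptions made for the example.

```python
def pallet_roi(origin, length, width, full_height, margin=0.05):
    """Axis-aligned ROI around a full pallet, enlarged by `margin` on each side.

    `origin` is the (x, y, z) of the pallet's lowest corner. Returns the
    pair (lower_corner, upper_corner) of the ROI.
    """
    x, y, z = origin
    lower = (x - margin, y - margin, z - margin)
    upper = (x + length + margin, y + width + margin, z + full_height + margin)
    return lower, upper


def inside_roi(point, roi):
    """Return True if a 3D point lies inside the ROI (bounds included)."""
    lower, upper = roi
    return all(lo <= p <= hi for p, lo, hi in zip(point, lower, upper))


# Hypothetical full pallet: 1.2 m x 1.0 m footprint, 1.5 m high when full.
roi = pallet_roi(origin=(0.0, 0.0, 0.0), length=1.2, width=1.0, full_height=1.5)
points = [
    (0.6, 0.5, 1.52),  # top layer, slightly above nominal height: kept
    (0.6, 0.5, 0.0),   # lowest region of the pallet: kept
    (2.0, 0.5, 0.5),   # unrelated object beside the pallet: dropped
]
kept = [p for p in points if inside_roi(p, roi)]
print(len(kept))  # 2
```

A larger margin tolerates pallet placement errors but also lets in more interfering points, so the margin is a trade-off to tune on-site.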

If the stability of deep learning-based recognition does not improve after the above steps, consider the following:

- Iterate the super model for the specific type of box or sack.

- Finetune the super model using data of the specific type of box or sack.

For detailed instructions, see the documentation on Super Model Iteration.