I recently trained a DLK model myself for a bin-picking application, using the instance segmentation algorithm. I only used one type of item for training, so why do other foreign objects also get a matching score?

Hello, I am Allen.

For your question, I assume the problem is related to the dataset. Either the data you fed in is not completely correct, or the instance labeling is not correct.

For a better suggestion, please post some images if possible.

Just for your information, I used more than 60 images for the DLK training. Is that enough data?

That amount of data is enough to train the model to identify one type of object.

But the number of images is not the only factor that influences DL model performance.

Thanks for your reply. I would like to follow up on this question.

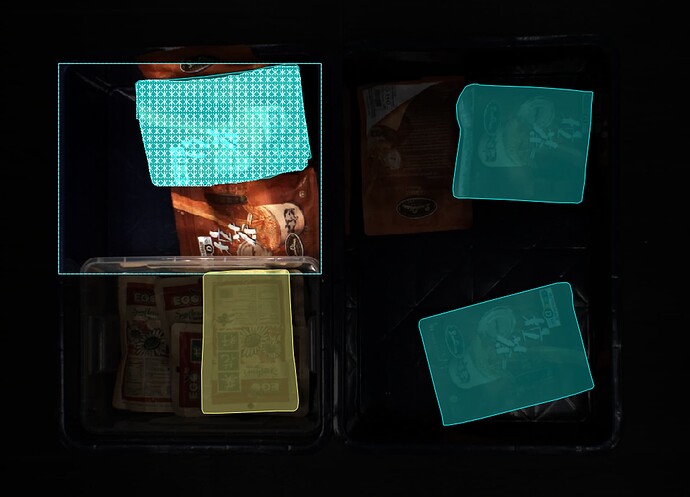

The following image is one of the images on which I drew the ROI for the DLK training.

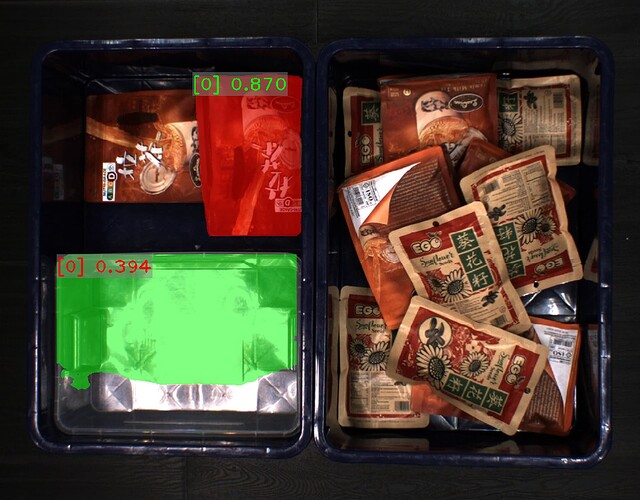

The following two images are the results of testing the DLK package with Mech-Vision.

Is there any advice on this result?

Based on the information provided so far, my conclusions are as follows.

The ROI on the 2D image should be equal or similar in size to the small bin. When applying the DL model in the project, ensure that the ROI configuration is the same as the ROI used during training. The ROI position can be changed, but the length and width of the ROI on the 2D image should remain similar.
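As a rough illustration of the ROI advice above, here is a minimal Python sketch that compares a training ROI with a runtime ROI. It reads the advice as "the ROI may move, but its length and width should stay similar". The `Roi` type, the `roi_sizes_match` helper, the pixel values, and the 10% tolerance are all assumptions made for this example; they are not part of DLK or Mech-Vision.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Roi:
    """Axis-aligned ROI on the 2D image, in pixels (hypothetical helper type)."""
    x: int
    y: int
    width: int
    height: int


def roi_sizes_match(training: Roi, runtime: Roi, tolerance: float = 0.1) -> bool:
    """Return True if width and height each differ by at most `tolerance` (relative)."""
    def close(a: int, b: int) -> bool:
        return abs(a - b) <= tolerance * max(a, b)

    # Position (x, y) is deliberately ignored: only the ROI size is compared.
    return close(training.width, runtime.width) and close(training.height, runtime.height)


# Made-up example values, not taken from the images in this thread.
training_roi = Roi(x=400, y=250, width=600, height=400)
shifted_roi = Roi(x=450, y=300, width=620, height=390)    # moved, similar size
enlarged_roi = Roi(x=400, y=250, width=1200, height=800)  # twice as large

print(roi_sizes_match(training_roi, shifted_roi))   # True
print(roi_sizes_match(training_roi, enlarged_roi))  # False
```

The tolerance is a judgment call; the point is only that a large change in ROI size between training and deployment changes how big the objects appear to the model.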

Please include empty-bin images in the dataset used to train the DL model to achieve stable performance.

Moreover, the overall brightness of the 2D image is a little low when a bag is near the bin wall. It is better to increase the 2D exposure time slightly. If possible, it also helps to set up an external light source above the bin so that the brightness is even across the whole bin area.
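If you want a quick number for how even the brightness is, one possible approach is to split the grayscale 2D image into a grid and compare the mean brightness of each cell. The sketch below is plain Python on a tiny made-up image; the function names, the 2x2 grid, and the sample values are assumptions for illustration only and are not a Mech-Vision feature.

```python
from statistics import mean


def region_brightness(gray, rows=2, cols=2):
    """Split a grayscale image (list of rows of 0-255 values) into a grid
    and return the mean brightness of each grid cell.

    Assumes the image has at least `rows` pixel rows and `cols` pixel columns.
    """
    height, width = len(gray), len(gray[0])
    result = []
    for r in range(rows):
        y0, y1 = r * height // rows, (r + 1) * height // rows
        row_means = []
        for c in range(cols):
            x0, x1 = c * width // cols, (c + 1) * width // cols
            cell = [gray[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            row_means.append(mean(cell))
        result.append(row_means)
    return result


def brightness_spread(gray, rows=2, cols=2):
    """Difference between the brightest and the darkest grid cell."""
    cells = [v for row in region_brightness(gray, rows, cols) for v in row]
    return max(cells) - min(cells)


# Made-up 4x4 image: the left half (near a bin wall) is darker than the right half.
sample = [
    [40, 40, 120, 120],
    [40, 40, 120, 120],
    [40, 40, 120, 120],
    [40, 40, 120, 120],
]

print(region_brightness(sample))
print(brightness_spread(sample))
```

A large spread suggests that some parts of the bin are lit much worse than others, which is the situation that extra lighting or a longer exposure is meant to reduce.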

Hope this information is helpful.

Thanks for pointing out my mistake.

I made the overall brightness of the 2D image a bit low because my bin was too reflective.

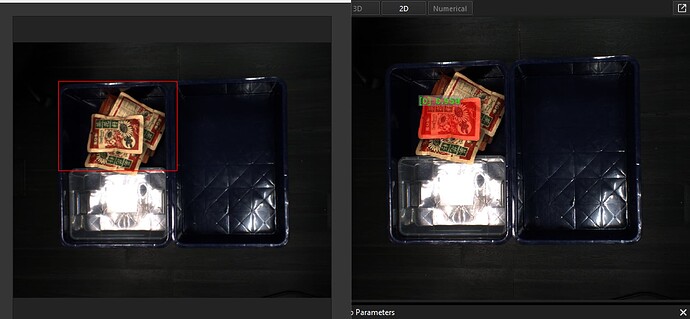

Based on your advice on the ROI area, I have now made the ROI area the same in both DLK and Mech-Vision, but I still face the same issue. Please refer to the following attached images.

(Just for your information, I also included the empty bin data for the DL model as well)

The current instance segmentation result seems correct.

What is the problem now? Is it still identifying two different bags as the same one?

Yes, the current problem is that I only trained on the big packet (tea bag) in the ROI, but even when I put in the small packet (sunflower seeds), I still get an instance segmentation result.

Have you included images of the small bag (different size) in the training dataset, without labeling them?

For the DLK, I only trained with the sample (big size) and the empty bin that will be in the ROI. Should I include the sample (small size) in the training as well?

If the big size and the small size will be in the same basket, I recommend including the small size when you train.

In the actual application, there will be no mixed scenario in the ROI, but I would like to show that if there is a foreign object inside the bin, the DL model will not segment it. Is that possible? Or will I need to train on every foreign object as well?

Yes, you actually need to train on the foreign object as well. When you are training, give the objects different labels; then in Mech-Vision you can filter by label to get what you want.
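To make the label-filtering idea concrete, here is a minimal Python sketch of the logic only. In a real project the filtering is configured inside Mech-Vision; the dictionary layout, the label names `tea_bag` and `sunflower_seeds`, the scores, and the `keep_label` helper below are all hypothetical and chosen just for this example.

```python
def keep_label(detections, wanted_label, min_score=0.5):
    """Keep only detections that carry the wanted label and a high enough score."""
    return [
        d for d in detections
        if d["label"] == wanted_label and d["score"] >= min_score
    ]


# Made-up segmentation results for a bin that contains a foreign object.
detections = [
    {"label": "tea_bag", "score": 0.97},
    {"label": "sunflower_seeds", "score": 0.93},  # foreign object, trained with its own label
    {"label": "tea_bag", "score": 0.42},          # low-confidence result, dropped by min_score
]

pickable = keep_label(detections, "tea_bag")
print(pickable)
```

Because the foreign object has its own label, it is still segmented, but it can be excluded afterwards instead of being mistaken for the target item.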