For a project with two cameras that requires merging the two cameras' point clouds, we usually calibrate the relation between the two cameras using the ETE method (as shown below).

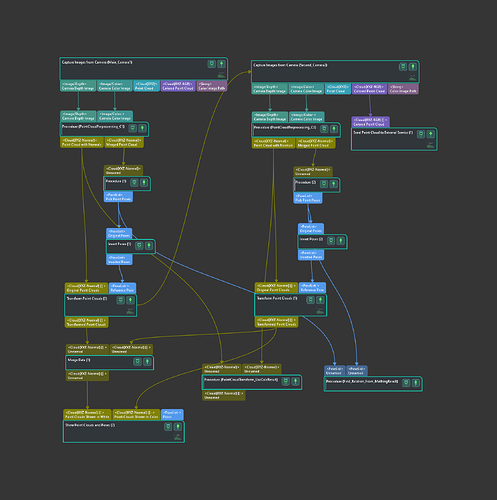

If a robot is not available, we can instead use a fiducial marker object and find the relation between the cameras through 3D matching (the first figure below shows the workflow, and the second is a screenshot of the Mech-Vision project).

The main part of the project implements this workflow. Both 3D matching procedures use the same point cloud model and the same pick point configuration. Therefore, given correct 3D matching results, we can transform each camera's point cloud into the model frame.

Applying the same method to both cameras brings the two cameras' point clouds into one common model frame.
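As a minimal sketch of this step, assume each 3D matching result is a 4x4 homogeneous pose of the model frame expressed in the camera frame (this convention is an assumption, not confirmed by Mech-Vision). A camera-frame point then maps into the model frame by applying the inverse of that pose. The helper names below (`invert_rigid`, `transform_points`, `camera_to_model`) are hypothetical and use plain Python lists rather than any Mech-Vision API.

```python
from typing import List, Sequence

# A 4x4 homogeneous transform stored row-major as nested lists.
Matrix4 = List[List[float]]


def invert_rigid(T: Matrix4) -> Matrix4:
    """Invert a rigid transform [R | t] as [R^T | -R^T t] (valid only for rotations)."""
    Rt = [[T[j][i] for j in range(3)] for i in range(3)]  # R transposed
    t = [T[0][3], T[1][3], T[2][3]]
    t_inv = [-sum(Rt[i][k] * t[k] for k in range(3)) for i in range(3)]
    return [Rt[i] + [t_inv[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def transform_points(T: Matrix4, points: List[Sequence[float]]) -> List[List[float]]:
    """Apply T to each 3D point, treating it as homogeneous with w = 1."""
    return [
        [sum(T[i][k] * p[k] for k in range(3)) + T[i][3] for i in range(3)]
        for p in points
    ]


def camera_to_model(model_pose_in_camera: Matrix4,
                    cloud: List[Sequence[float]]) -> List[List[float]]:
    """Express a camera-frame cloud in the model frame: p_M = pose^-1 * p_C.

    Assumes the matching result is the model pose in the camera frame.
    """
    return transform_points(invert_rigid(model_pose_in_camera), cloud)


# Example: model frame rotated 90 degrees about z and shifted 1 unit along x.
pose = [[0.0, -1.0, 0.0, 1.0],
        [1.0, 0.0, 0.0, 0.0],
        [0.0, 0.0, 1.0, 0.0],
        [0.0, 0.0, 0.0, 1.0]]
print(camera_to_model(pose, [[1.0, 1.0, 0.0]]))  # [[1.0, 0.0, 0.0]]
```

The closed-form rigid inverse is used instead of a general matrix inverse because a matching pose contains only rotation and translation.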

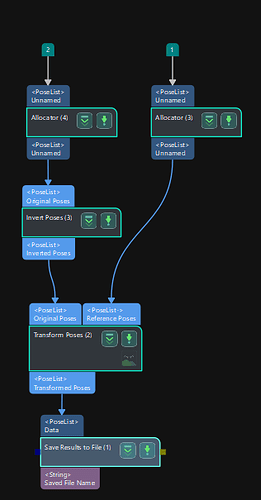

The procedure Find_Relation_From_MatchingResult computes the relation between the two cameras from the 3D matching results (screenshots shown below).

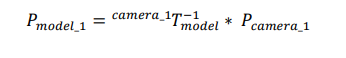

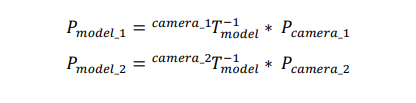

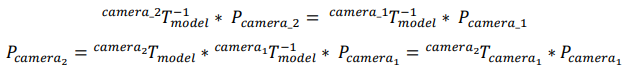

The result is calculated based on the formula below:

Both matching results are expressed with respect to the same model frame.

The transformation matrix T is the relation between the two cameras.
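Assuming each 3D matching result is the pose of the model frame $M$ expressed in the corresponding camera frame (the notation ${}^{C_1}T_M$ and ${}^{C_2}T_M$ is introduced here for illustration and is not taken from Mech-Vision), one way to write this relation, with $p$ a point in homogeneous coordinates, is:

$$
p_{C_1} = {}^{C_1}T_M \, p_M, \qquad p_{C_2} = {}^{C_2}T_M \, p_M
$$

$$
p_M = \left({}^{C_2}T_M\right)^{-1} p_{C_2} \;\Rightarrow\; p_{C_1} = {}^{C_1}T_M \left({}^{C_2}T_M\right)^{-1} p_{C_2}
$$

$$
T = {}^{C_1}T_M \left({}^{C_2}T_M\right)^{-1}
$$

Because both results refer to the same model frame, $p_M$ cancels and $T$ maps points from camera 2's frame into camera 1's frame. If the procedure instead outputs each camera's pose in the model frame, the inverse moves to the other factor: $T = \left({}^{M}T_{C_1}\right)^{-1} {}^{M}T_{C_2}$.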

The procedure PointCloudTransform_UseCalcResult uses the relation between the two cameras to transform camera 2's point cloud into camera 1's frame, as shown below.
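The calculation and merge can be sketched in plain Python, assuming both matching results are 4x4 poses of the model frame in the respective camera frames. All function names here (`relation_from_matching`, `merge_clouds`, and the matrix helpers) are hypothetical illustrations, not the internals of Find_Relation_From_MatchingResult or PointCloudTransform_UseCalcResult.

```python
from typing import List, Sequence

# A 4x4 homogeneous transform stored row-major as nested lists.
Matrix4 = List[List[float]]


def mat_mul(A: Matrix4, B: Matrix4) -> Matrix4:
    """Multiply two 4x4 matrices."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def invert_rigid(T: Matrix4) -> Matrix4:
    """Invert a rigid transform [R | t] as [R^T | -R^T t]."""
    Rt = [[T[j][i] for j in range(3)] for i in range(3)]  # R transposed
    t = [T[0][3], T[1][3], T[2][3]]
    t_inv = [-sum(Rt[i][k] * t[k] for k in range(3)) for i in range(3)]
    return [Rt[i] + [t_inv[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def transform_points(T: Matrix4, points: List[Sequence[float]]) -> List[List[float]]:
    """Apply T to each 3D point, treating it as homogeneous with w = 1."""
    return [[sum(T[i][k] * p[k] for k in range(3)) + T[i][3] for i in range(3)]
            for p in points]


def relation_from_matching(pose_in_cam1: Matrix4, pose_in_cam2: Matrix4) -> Matrix4:
    """T = pose_in_cam1 * inverse(pose_in_cam2); maps camera-2 points into camera 1."""
    return mat_mul(pose_in_cam1, invert_rigid(pose_in_cam2))


def merge_clouds(cloud1: List[Sequence[float]], cloud2: List[Sequence[float]],
                 T_cam1_cam2: Matrix4) -> List[List[float]]:
    """Concatenate cloud 1 with cloud 2 after moving cloud 2 into camera 1's frame."""
    return [list(p) for p in cloud1] + transform_points(T_cam1_cam2, cloud2)


# Example: the same model point (1, 0, 0) observed by both cameras.
pose1 = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0],
         [0.0, 0.0, 1.0, 1.0], [0.0, 0.0, 0.0, 1.0]]
pose2 = [[0.0, -1.0, 0.0, 1.0], [1.0, 0.0, 0.0, 0.0],
         [0.0, 0.0, 1.0, 0.0], [0.0, 0.0, 0.0, 1.0]]
T = relation_from_matching(pose1, pose2)
merged = merge_clouds([[1.0, 0.0, 1.0]], [[1.0, 1.0, 0.0]], T)
print(merged)  # [[1.0, 0.0, 1.0], [1.0, 0.0, 1.0]]
```

In the example, the point seen by camera 2 lands exactly on the point seen by camera 1, which is a quick sanity check that T has the right direction (camera 2 into camera 1).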