During inference with a deep learning model package, the inference time is stable when the interval between two consecutive inferences is short. When the interval is long, however, the inference time fluctuates. This is likely to happen when you deploy with Mech-Vision, because Mech-Vision still needs to run other Steps during the interval.
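To check whether your setup shows this behavior, you can time the same inference call with a short and a long idle gap before each run. The sketch below is only an illustration: `measure_latency`, `summarize`, and `dummy_infer` are hypothetical names, and `dummy_infer` is a CPU-only stand-in that you would replace with your own inference call.

```python
import time
from statistics import mean, pstdev


def measure_latency(infer, idle_seconds, runs=5):
    """Time `runs` calls to `infer`, sleeping `idle_seconds` before each call."""
    durations_ms = []
    for _ in range(runs):
        time.sleep(idle_seconds)  # simulated gap between two consecutive inferences
        start = time.perf_counter()
        infer()
        durations_ms.append((time.perf_counter() - start) * 1000.0)
    return durations_ms


def summarize(durations_ms):
    """Return (mean, population standard deviation) in milliseconds."""
    return mean(durations_ms), pstdev(durations_ms)


if __name__ == "__main__":
    def dummy_infer():
        # Hypothetical stand-in; replace with your real inference call.
        sum(i * i for i in range(100_000))

    # Compare a back-to-back run with a run that idles before each call.
    for idle in (0.0, 0.5):
        avg, spread = summarize(measure_latency(dummy_infer, idle, runs=3))
        print(f"idle {idle:.1f} s: mean {avg:.2f} ms, std {spread:.2f} ms")
```

With a real GPU inference call, a noticeably larger spread at the longer idle gap would match the fluctuation described above. Running the same comparison again after completing the steps shows whether the settings helped.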

Follow the steps below to fix this problem.

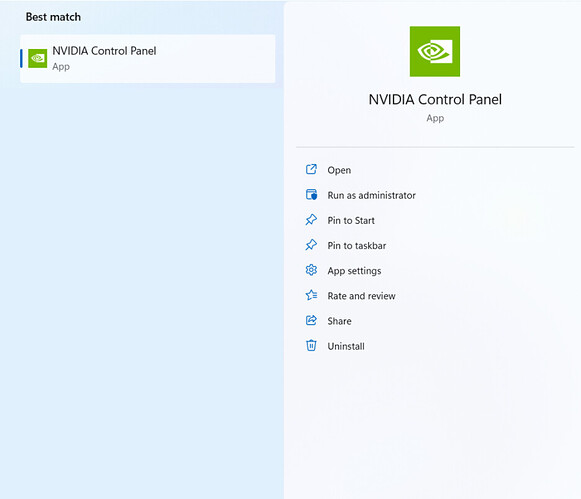

Step 1

Search for NVIDIA Control Panel in the Start menu and open it.

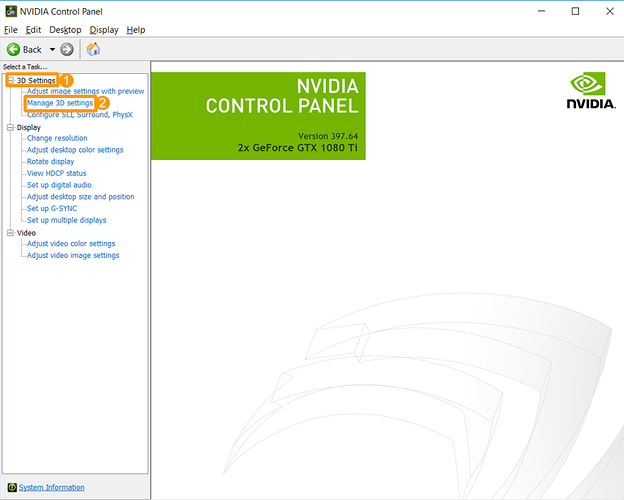

Step 2

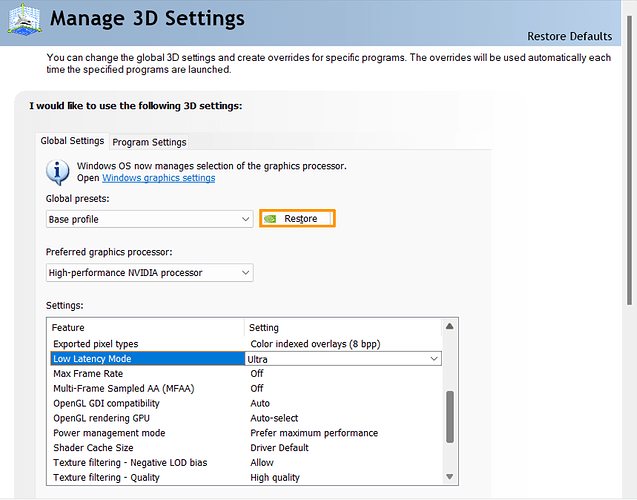

Select 3D Settings > Manage 3D Settings.

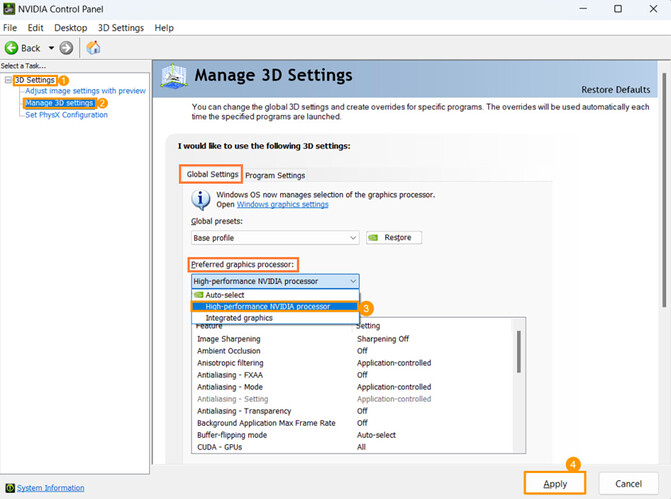

For computers with integrated graphics

Go to Global Settings and set Preferred graphics processor to High-performance NVIDIA processor.

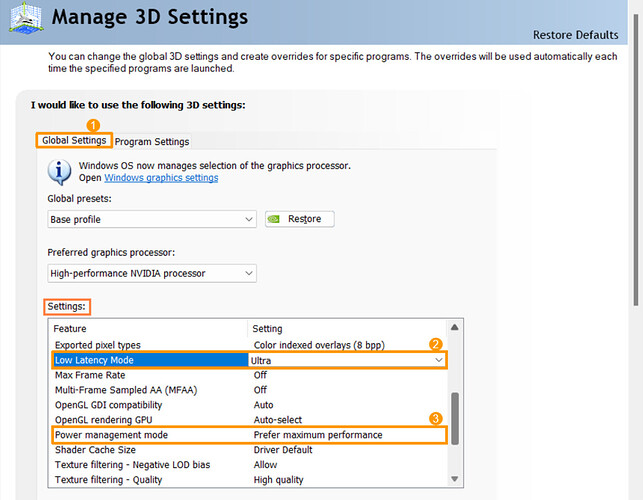

Step 3

Under Global Settings > Settings, set Low Latency Mode to Ultra and set Power management mode to Prefer maximum performance.

Step 4

Search for Command Prompt in the Start menu, right-click it, and select Run as administrator.

Step 5

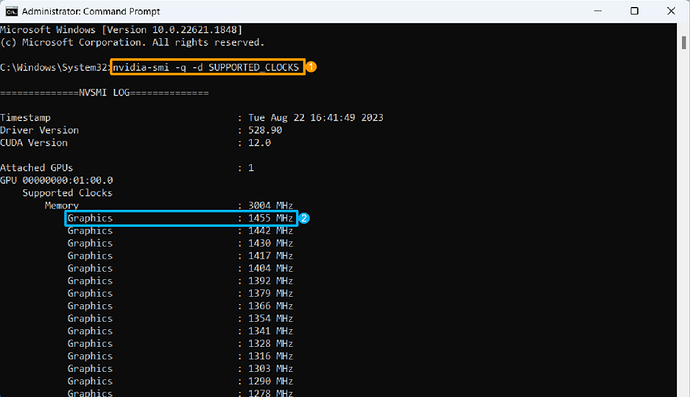

Type nvidia-smi -q -d SUPPORTED_CLOCKS and press Enter. Note down the number shown in the blue box below. The actual value depends on your computer. In this example, the number is 1455.
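If you prefer to read the values with a script instead of from the console, the sketch below extracts the Graphics clock values from the report text. It assumes the report lists each value on a line of the form "Graphics : 1455 MHz". The sample text is illustrative, not output from a real machine, and `graphics_clocks` is a hypothetical helper name. The article does not say which listed value the blue box marks, so the function returns all of them and leaves the choice to you.

```python
import re

# Illustrative sample in the assumed report layout; values are made up
# except 1455, which is the example number used in this article.
SAMPLE_OUTPUT = """\
    Supported Clocks
        Memory                            : 7001 MHz
            Graphics                      : 1455 MHz
            Graphics                      : 1440 MHz
            Graphics                      : 1425 MHz
"""

# Matches lines such as "Graphics : 1455 MHz" and captures the number.
GRAPHICS_LINE = re.compile(r"^\s*Graphics\s*:\s*(\d+)\s*MHz\s*$", re.MULTILINE)


def graphics_clocks(report):
    """Return every Graphics clock (MHz) listed in the report, in order of appearance."""
    return [int(value) for value in GRAPHICS_LINE.findall(report)]


if __name__ == "__main__":
    print(graphics_clocks(SAMPLE_OUTPUT))  # prints [1455, 1440, 1425]
```

To use real data, pass in the text printed by the command from this step, for example by capturing it with `subprocess.run(["nvidia-smi", "-q", "-d", "SUPPORTED_CLOCKS"], capture_output=True, text=True).stdout`.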

Step 6

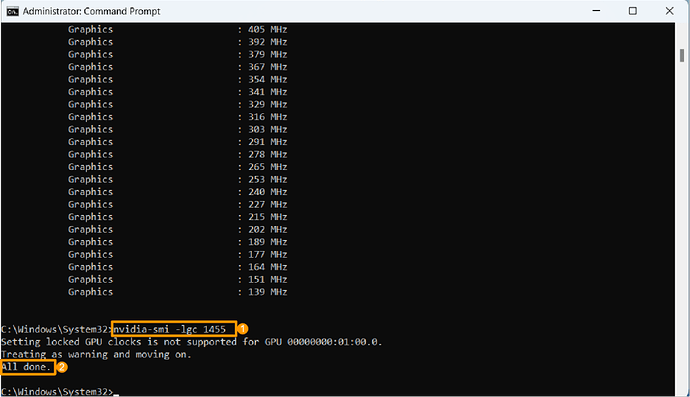

Type nvidia-smi -lgc xxxx, where xxxx is the number in the blue box that you noted down in Step 5, and press Enter.

Step 7

If the message All done appears, restart your computer so that all settings take effect.
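Steps 6 and 7 can also be scripted. The following is a minimal sketch, assuming that nvidia-smi is on the PATH and that the script runs from an administrator session. The function names are hypothetical, and the check simply looks for the All done message described in Step 7.

```python
import subprocess


def lock_clock_command(clock_mhz):
    """Build the Step 6 command for a clock value in MHz."""
    if not isinstance(clock_mhz, int) or clock_mhz <= 0:
        raise ValueError("clock_mhz must be a positive integer")
    return ["nvidia-smi", "-lgc", str(clock_mhz)]


def reports_all_done(output):
    """Check for the confirmation message described in Step 7."""
    return "All done" in output


def lock_clock(clock_mhz):
    """Run the command; return True if it succeeded and printed 'All done'."""
    # Raises FileNotFoundError if nvidia-smi is not installed or not on the PATH.
    result = subprocess.run(
        lock_clock_command(clock_mhz),
        capture_output=True,
        text=True,
    )
    return result.returncode == 0 and reports_all_done(result.stdout)
```

For example, `lock_clock(1455)` runs the command with the example value from Step 5. Replace 1455 with the number from your own computer. A restart is still required afterwards.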

==========================================================

How to restore the default settings

First, click the Restore button in NVIDIA Control Panel. Then, run Command Prompt as administrator (Step 4), type nvidia-smi -rgc, and press Enter. After that, restart your computer.
